What is AnimaSentio?

AnimaSentio is a research project that aims to transform how people learn through interactive, AI-driven virtual human simulations. It combines technologies such as computer vision and emotion-detection algorithms to create digital human characters that engage with users in real time.

Led by Professor Jeasy Sehgal at Georgia State University, the project focuses on bringing history to life: users interact with 3D models of historical figures powered by conversational AI.

Example: Learning about the civil rights movement through interaction with a digital Dr. Martin Luther King Jr., or exploring ancient Egypt with a digital Cleopatra.

Project Goals

- Create personalized educational experiences for museum visitors

- Develop digital humans that respond to emotions, facial expressions, and voices

- Enhance engagement and knowledge retention through immersive interactions

The Technology Behind AnimaSentio

The project combines AI and computer vision. Its virtual humans are built with Unreal Engine's animation tools, Convai, and NVIDIA platforms such as ACE and NIM microservices to support real-time interaction.

These virtual humans can see and hear users. They process speech and facial expressions in real time and use AI models such as NVIDIA NeMo and ElevenLabs to hold natural conversations.
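To make the flow described above concrete, here is a minimal Python sketch of one conversational turn: perception results come in, the character's persona and the visitor's detected emotion shape the prompt for the next reply, and an animation cue is chosen for the face. It does not use the Convai, ACE, or NeMo APIs. The `Perception`, `Turn`, `Character`, and `EMOTION_TO_CUE` names and the emotion labels are hypothetical, and the language model is a plain function passed in so that any backend could be substituted.

```python
from dataclasses import dataclass, field
from typing import Callable, List

# Hypothetical mapping from a detected user emotion to an animation cue
# for the digital human; the labels are illustrative, not the project's.
EMOTION_TO_CUE = {
    "happy": "smile",
    "sad": "concerned",
    "confused": "explain_slowly",
    "neutral": "attentive",
}


@dataclass
class Perception:
    transcript: str           # text from an assumed speech-recognition stage
    emotion: str = "neutral"  # label from an assumed facial-expression stage


@dataclass
class Turn:
    reply: str
    animation_cue: str


@dataclass
class Character:
    persona: str                    # short description of the historical figure
    generate: Callable[[str], str]  # stand-in for any conversational model
    history: List[str] = field(default_factory=list)

    def build_prompt(self, p: Perception) -> str:
        # Persona, the last few dialogue lines, and the visitor's detected
        # emotion all condition the next reply.
        recent = "\n".join(self.history[-6:])
        return (
            f"You are {self.persona}.\n"
            f"The visitor currently appears {p.emotion}.\n"
            f"{recent}\n"
            f"Visitor: {p.transcript}\nYou:"
        )

    def respond(self, p: Perception) -> Turn:
        reply = self.generate(self.build_prompt(p))
        self.history += [f"Visitor: {p.transcript}", f"You: {reply}"]
        # Unknown emotion labels fall back to a neutral, attentive pose.
        cue = EMOTION_TO_CUE.get(p.emotion, "attentive")
        return Turn(reply=reply, animation_cue=cue)


if __name__ == "__main__":
    # A canned reply stands in for a real language model.
    cleopatra = Character(
        persona="Cleopatra, guiding a museum visitor through ancient Egypt",
        generate=lambda prompt: "Let me show you the Nile.",
    )
    turn = cleopatra.respond(Perception("What was Alexandria like?", "confused"))
    print(turn.animation_cue)  # explain_slowly
```

Keeping the model behind a single `generate` callable is one way to separate dialogue logic from any particular vendor service; the returned cue would then be handed to whatever drives the character's facial animation.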

Privacy is a priority: all data is securely discarded after processing.
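The process-then-discard policy can be illustrated with a short Python sketch: raw sensor bytes are held in a mutable buffer only while an analyzer runs, the buffer is overwritten on exit (even if analysis fails), and only the derived label is returned. The function names and the analyzer are hypothetical. This shows the shape of the policy, not secure erasure: Python cannot guarantee that the immutable input `bytes` or copies made inside libraries are wiped, which a production system would have to handle at the capture and storage layers.

```python
from contextlib import contextmanager
from typing import Callable, Iterator


@contextmanager
def transient_buffer(raw: bytes) -> Iterator[bytearray]:
    """Expose raw sensor bytes in a mutable buffer that is zeroed on exit."""
    buf = bytearray(raw)
    try:
        yield buf
    finally:
        # Overwrite in place, even if the analysis raised an exception.
        buf[:] = bytes(len(buf))


def process_and_discard(raw: bytes, analyze: Callable[[bytearray], str]) -> str:
    """Run a (hypothetical) analyzer and keep only its derived label."""
    with transient_buffer(raw) as buf:
        label = analyze(buf)
    # Only the label leaves this function; the raw frame is never stored.
    return label
```

In use, a camera frame and an expression classifier (both assumed here) would be passed as `process_and_discard(frame_bytes, classify_expression)`, so downstream code only ever sees a label such as `"happy"`.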

Research Phases

- Developed emotional and empathic response systems using Unreal Engine 5.

- Integrated facial, head, and eye movements with computer vision algorithms (using OpenCV) to adjust gaze and expressions.

- Automating animations based on real-time computer vision, so that digital humans respond dynamically to user movement and expressions.

- Integrated AI-generated voiceovers with synchronized facial animation (powered by NVIDIA ACE) for fluid conversations.

- Using GPT-based conversational AI to enable real-time, interactive dialogue with users.

- Expanding into other sectors (e.g., healthcare, education) and developing new interactive learning experiences.
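The phases above mention using OpenCV to adjust the digital human's gaze. One common way to do this, sketched here in Python under stated assumptions, is to convert a detected face rectangle into yaw and pitch angles with a pinhole camera model and then smooth them over time. The `gaze_angles` and `smooth` functions are hypothetical, the 60° default field of view is an arbitrary placeholder, and the sketch treats the camera as if it sat at the character's viewpoint. Mapping these angles onto an Unreal Engine rig (including any left/right mirroring) is not shown and depends on the rig's conventions.

```python
import math
from typing import Tuple


def gaze_angles(
    face_box: Tuple[float, float, float, float],
    frame_size: Tuple[int, int],
    hfov_deg: float = 60.0,
) -> Tuple[float, float]:
    """Return (yaw, pitch) in degrees from the camera axis to a face centre.

    face_box is (x, y, w, h) in pixels, the rectangle format returned by
    OpenCV's CascadeClassifier.detectMultiScale. Assumes a pinhole camera
    with square pixels; hfov_deg is its horizontal field of view.
    """
    x, y, w, h = face_box
    width, height = frame_size
    # Focal length in pixels, derived from the horizontal field of view.
    f = (width / 2) / math.tan(math.radians(hfov_deg) / 2)
    dx = (x + w / 2) - width / 2   # positive: face right of image centre
    dy = height / 2 - (y + h / 2)  # positive: face above image centre
    return math.degrees(math.atan2(dx, f)), math.degrees(math.atan2(dy, f))


def smooth(
    prev: Tuple[float, float], target: Tuple[float, float], alpha: float = 0.2
) -> Tuple[float, float]:
    """Exponential moving average so the eyes do not jitter frame to frame."""
    return (
        prev[0] + alpha * (target[0] - prev[0]),
        prev[1] + alpha * (target[1] - prev[1]),
    )
```

Per frame, each detection would pass through `gaze_angles` and then `smooth` before driving the character's eye and head controls; a smaller `alpha` gives calmer but slower gaze shifts.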

Project Renders

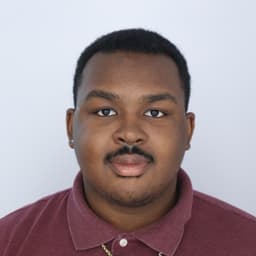

Impact and Future Goals

AnimaSentio aims to transform education through personalized, immersive learning experiences. In museums, the project seeks to increase visitor engagement and knowledge retention through real-time, AI-driven conversations.

Future plans include expanding the library of historical figures, partnering with museums, and exploring applications in healthcare, digital therapy, and customer service.

Our Vision

The goal is to make learning and customer interactions more engaging and meaningful by extending virtual human capabilities to new sectors.